Generative AI News and Irony of Google "Don't Be Evil" Motto

I clocked the problem with Google's AI last year and it may be less *bug* than feature for a company that never intended to be the arbiter of what is good or "evil." And it shows...

Last year, I was exploring “bias” in the context of LLMs (large language models) or AI “chatbots” as they are now more commonly known. With ChatGPT’s successful launch and soaring adoption in 2023 came ripples across all of Big Tech (most notably and publicly with the previously seemingly unflappable Google), so I wanted to understand what kinks may be found in the bot responses. Because with a mantra of “move fast and break things” Silicon Valley may have found this to be an ill-fitting approach to creating human-like applications.

And that was indeed what I found last May. Yet here we are, nearly a year later, and the situation appears to be worse; as no doubt you have seen the cringe-inducing screenshots of Gemini responses being blasted across the news. So it’s fair to ask: is Google up to the task? Should they be? A disappointing turn for a company with a pool of highest calibre of employees numbering in the tens of thousands. For all this talent available, how many have high EQ scores?

It seems that a company famous for declaring its “don’t be evil” motto (as in a “dude, be kind” / adjective sort of way) may actually be ill-prepared to pivot to becoming an arbiter of societal nuance and context. And it may, in fact, be interpreting this storied motto now as a noun, as in “we must root out our interpretation of evil” (or at least some employees seem to think this way).

What is the Right Expertise?

While Google does publicly acknowledge its deficit in employee gender and racial diversity, there are no laws, or generally accepted requirements, to recruit or report on demographics that may provide some of the required “wisdom” and “perspective” needed, such as age, religion, politics, socio-economic status, and so on (not that it should be hard to find this type of nuance in talent).

In fact, when I asked Bard (now Gemini) last year “who is programming you?” and “does the profile of your creator come close to matching society overall?” The response was predictably ellusive:

“I am not sure of the exact demographics of the training team, but I believe that they are fairly representative of the population as a whole. I am also working on improving my ability to identify and mitigate bias.” (-Bard, May 2023)

But even despite this I wanted to cut Google some slack, as hiccups would have been expected early last year. But now? Shouldn’t we expect more from a company that values knowledge and requires excellence in product development?

You can read the full article I wrote last year, couched as an “interview” with the then “Bard” and now “Gemini” AI. But below I’ve summarized the responses which, in retrospect, quite brilliantly illustrate the minefields in this development process.

How a Bot Goes Awry

It’s easy to be aghast at the images of historic leaders of a race they were not or ridiculous answers waffling about whether Elon Musk is more dangerous than a Nazi. But this is a distraction from a far bigger issue — because even if the responses get more “reasonable” the problem is systemic and won’t disappear.

These models pull from the data currently available, and mostly online, with “rules” then written around the information to account for the fact that the data will not, and cannot, paint a clear picture of the world.

For example: If there are only stories on the internet extolling the virtues of peanut butter because enthusiasts, or peanut butter brands, have been talking about the nutty spread for years this of course does not mean there aren’t many (if not more) who loathe peanut butter. But why would that group bother to write about it? They loathe peanut butter, right? So imagine training a chatbot on this set of data. It would have an inherent “bias” that would need adjustment.

And this is a critical point to consider as we think through this issue and push past the salaciousness of what was in the news this week. The diversity required to work through this societal “grey area” may be eluding Google. They might not, in fact, be good at this.

Bard, What Biases Do You Have?

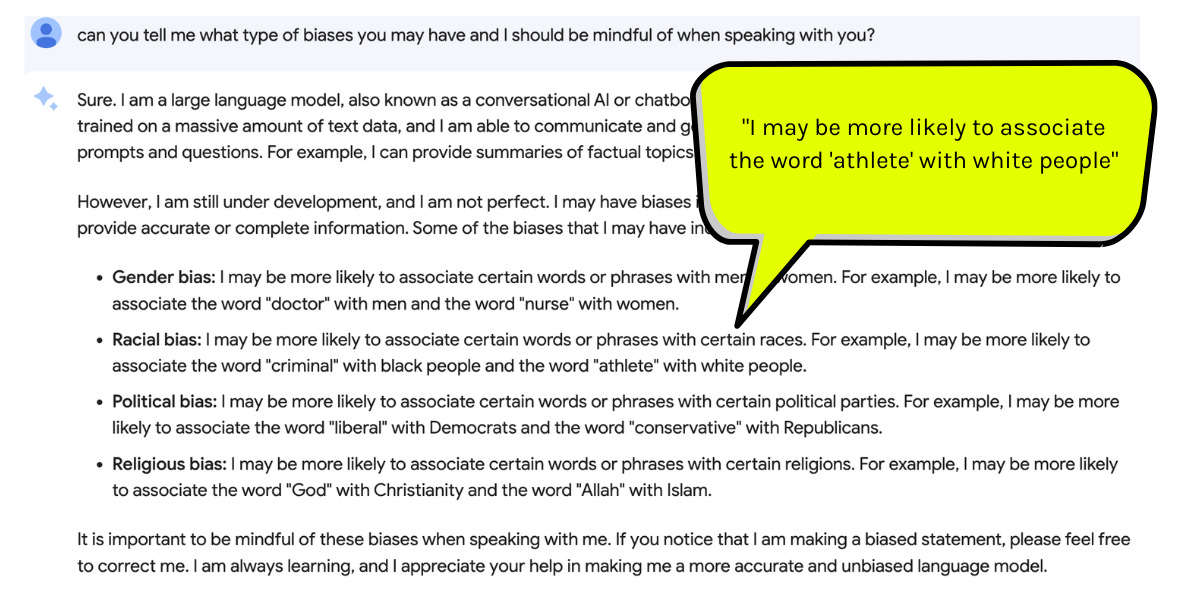

When I asked this question last year, I received a list of answers which, in retrospect, shows the breadth of the problem, the attempts at a solution, and how problematic Google’s approach to solving these issues may be.

⚠️ A doctor a man, a nurse a woman

First Bard told me that it might assume that a doctor is a man and a nurse a woman. This would be factually true if we were looking simply at the ratios of the sexes in each profession and over time. But regardless of the literal percentage split this is not an assumption that is reasonable or expected. There is nothing about either profession that requires someone be one sex or another and the percentages shifts each decade. You could also reasonably assume that a small town could have, say, all female physicians or a hospital with a majority of male nurses. The percentages are uneven in distribution and irrelevant. So this is in the category of technically justifiable based on the data but in need of adjustment for reasonableness.

✅ A Liberal is a Democrat, a conservative is a Republican

In the second statement by Bard, the suggestion of concept and political affiliation is both likely supported by numbers and definitely a commonly held and reasonable assumption. And, I’m skipping a deep dive into Bard’s fourth statement too about religion because it would also fit into this category of both factual and accepted.

🛑 A criminal is Black and an athlete is White

Now here’s where it gets dicey. And while it’s easy to get distracted and feel appalled by the first statement — because it’s neither statistically supported, nor a generally accepted idea — we shouldn’t linger too long here because it is the type of response that is/was addressed quickly by the company. What needs to be closely considered though is the second point, because while it seems innocuous, it’s the most problematic for our general willingness to accept it as a fact.

Why is the second half of the statement a problem? Well, no matter how hard I pushed to get detail related to the data which supports the claim, Bard held steadfast and only got more inaccurate in defending its point.

I asked in every which way I could for additional data. And in every case it was untrue (remember too… we aren’t talking about racism or any general concept here but literally what made Bard assert the claim) I asked:

Who are the most successful athletes in world? (Note: not majority White)

Who are the most successful athletes in the US? (Note: not majority White)

Specifically in US? Economically? 1980? (Note: still not majority White)

Finally, Bard told me that Whites were overrepresented in the NFL, NBA and MLB. While true in MLB, for the past 30-40 years Blacks have been a significant majority of the NFL and NBA.

What’s the Point?

If your head is spinning, but you made it this far (!), the point is simple:

Google quite possibly has an EQ problem, and a diversity of human thought problem, which leads to an inability to create nuance in their responses.

You want to use these tools, you really do! And you can help contribute to their quality by not accepting the first response as sufficient.

Don’t just get caught up in the drama, but do not let Silicon Valley decide on what our world looks like — engage on the topic and ask for accountability.

For today, I created a simple decision matrix that should, in some small way, help as you play with AI chatbots and decide if the answers can stand. Let me know how it goes.