We Need a Food Pyramid for Technology

Technology is now as much a part of our lives as the food we eat. Yet while we have nutritional guidance for food, we're drowning in tech “empty calories” with no clear framework to guide us...

If you think about it, our relationships with food and with technology have a lot in common. While the former is core to our actual survival, the latter is increasingly key to our ability to thrive at work, at school, and at home.

We have a lot of choice in what to eat. And similarly, the options for technology to explore are bountiful. In both cases we also rely on scientists, specialists, and other experts to ensure we have food to eat and technology to use.

But here’s where things start to diverge: We are exceptionally confident when advocating for what we think is “good” to eat, but then err on the side of caution when declaring what technology we think is “right.”

In both cases we have a choice, and yet there seems to be an inherent contradiction in how we approach our power to control each. I called this out in a video I created last year…

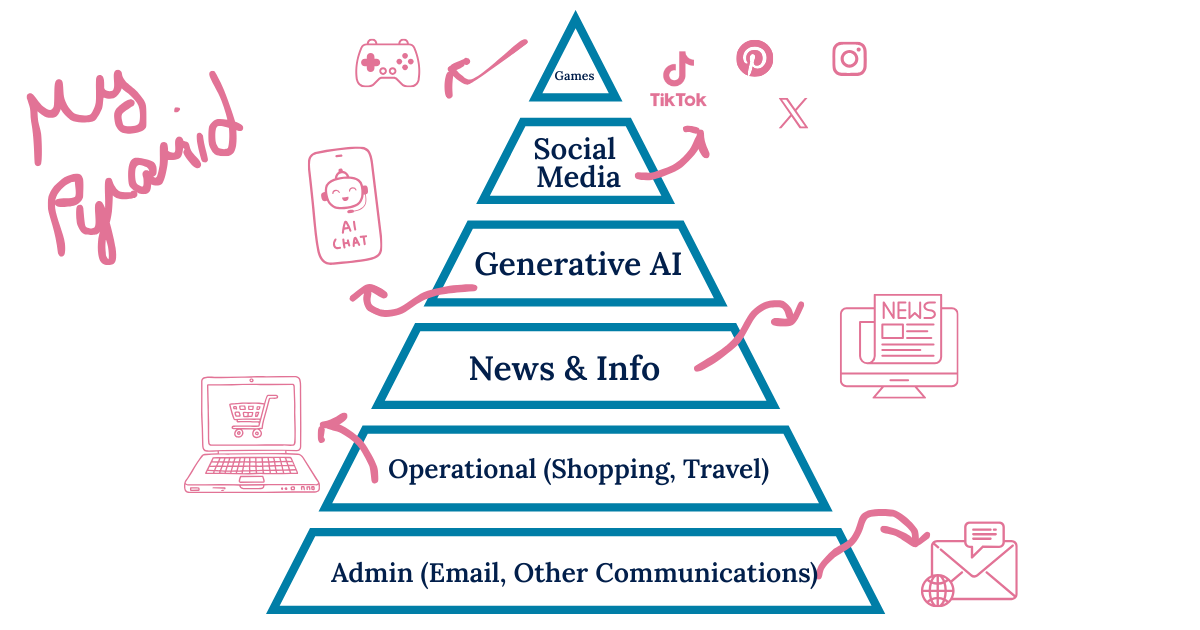

A Food Pyramid for Tech

When it comes to food, consumers get “guidance”: research, information, and suggestions that we can then customize as we advocate for ourselves and our families.

What tends to be missing from the endless news and chatter around technology bans today (with social media being the most notable) is the bigger conversation around how we think about our overall tech consumption. Just like with food, if we are consuming everything in sight mindlessly, targeting one type of technology or platform simply does a disservice to our overall “health.”

The numbers tell this story of technology overload. According to recent research, the average person has approximately 80 apps on their phone, using about nine a day and 30 each month. And when it comes to schools, it’s even more eye-opening: According to Education Week, during the 2024-25 school year, districts accessed an average of 2,982 distinct ed-tech tools annually and 1,574 tools monthly.

We only have so much mental capacity available to us and should be using it far more wisely.

Making it Personal

Now, the catch with creating a “tech pyramid” is that it has to be personal. We must decide for ourselves what will work best and where the lion’s share of our time should be spent.

Personal agency isn’t just at the core of how to decide what technology is good for us—it’s also key to using tools such as generative AI. Creating this type of visual is a great way to talk to our kids and give them a sense of ownership and control.

What are your thoughts about regaining some sense of control here? Let me know!

In Other News

OpenAI Takes a Better Approach to Age Verification

As I’ve written previously, age verification via image capture of kids is problematic. Our data is increasingly valuable to criminals, with high-quality digital captures of children topping the list of data to steal. Sometimes it’s necessary for adults to use image-based identification, but we should avoid it for children.

That’s why I found OpenAI’s recently announced approach worth celebrating. They will be using their own AI technology to determine whether a user is likely under 18. If an adult is incorrectly categorized, then the onus is on them to verify their age.

Guardrails for kids are important, and this is a small tweak to an approach that can go far toward providing even greater protections.

Anthropic Delivers its “Constitution”

One of the biggest challenges with generative AI is psychological. When using a tool that interacts with us in a “human” way, we need to remind ourselves of two things. First, we should think independently and not be coerced by the machine’s “confidence” in its responses. Second, there is a person behind the screen programming a framework for those responses.

All of this makes Anthropic’s newly updated “constitution” particularly interesting. It’s the type of information we need to determine whether Anthropic’s approach in creating Claude is one that we agree with.

The document is long, and some might find it heavy-handed, but it provides insight into the decisions being made behind the scenes. More importantly, it highlights how important it is for consumers to understand AI governance and why there will be a role in the future for those who study philosophy, ethics, sociology, and psychology.

Upcoming Events

I’ve got some fun events in the works and look forward to sharing the details with you. The next one is in February in NYC, with details to come. To learn more you can sign up for updates here….