Welcome to The (AI) Outliers

How do we prepare for the age of AI? We hold tight to what makes us human and continue to tell the stories of remarkable, and uniquely "human," feats...

How do we prepare our kids for a future that will be dominated by AI? Well, if you are a subscriber, or have checked out my book AI for Families: Ultimate Guide to Mastering AI, you’ll know that I believe we do this, in part, by recognizing, cultivating, and holding dear those aspects of our humanity that make us special.

Once we know WHO we are, it’s much easier to decide HOW machines can enhance our lives and WHERE the lines should be drawn concerning our use of AI systems.

What’s at Risk?

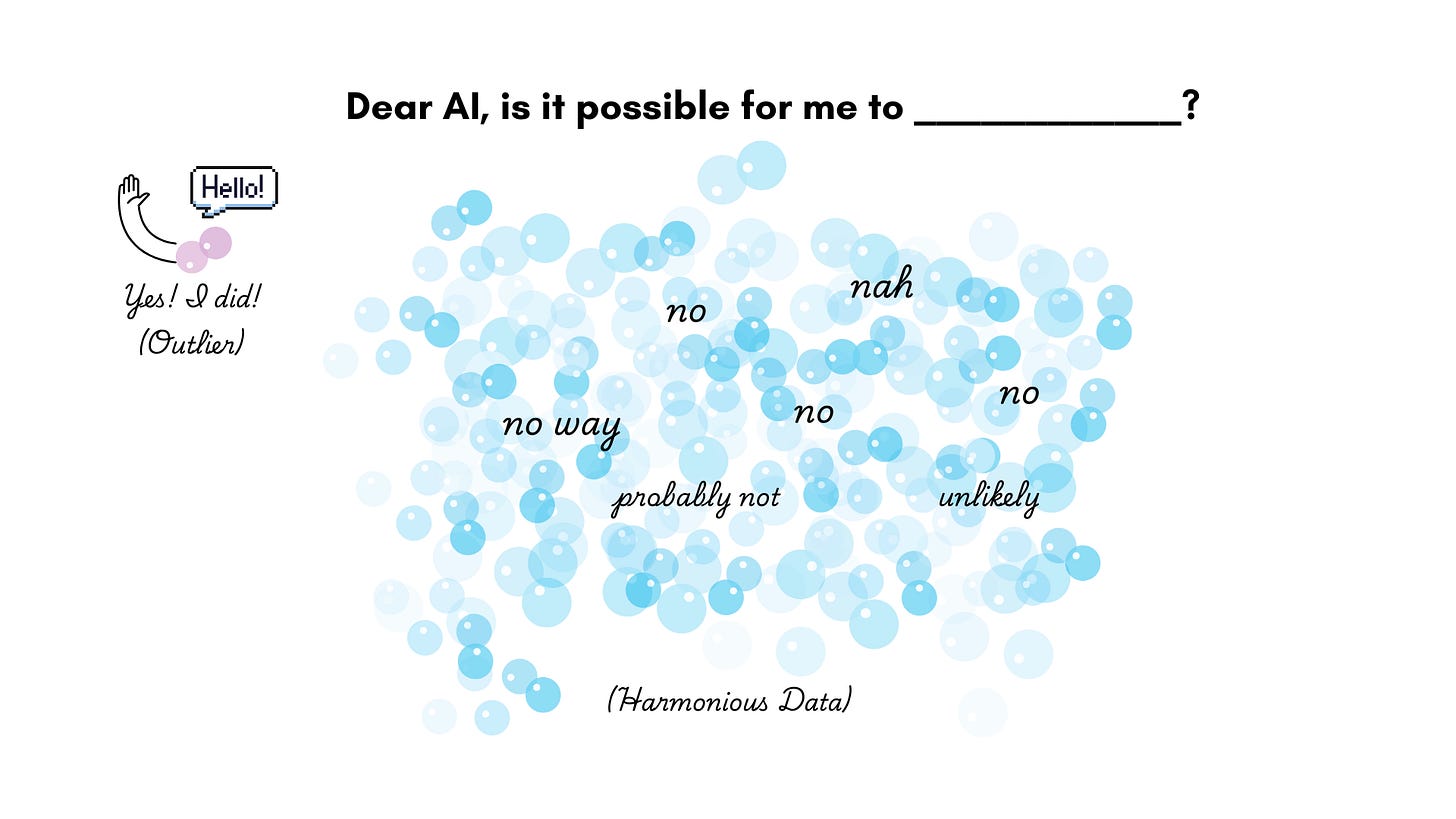

One of the unintended (and, I believe, least discussed) risks of AI is how it’s incentivized to deliver a response that is “safe.” It goes for the “most likely” or “most provable” answer. Of course, that makes sense. But are we fully considering the implications of safe and “homogeneous” as we pepper AI into absolutely everything? Probably not.

Just look at what’s happening with AI-enabled Internet search results. You might be looking for a variety of perspectives and opinions when you search on Google, but now quite often the AI results are curt and binary in their “answer.”

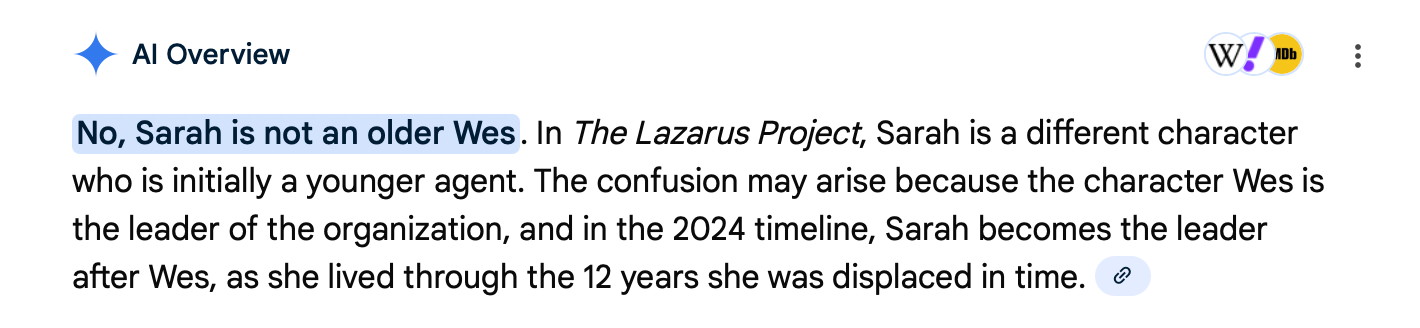

For instance, I wanted to see what “people were saying” about the ending of a TV series I loved (The Lazarus Project). I wondered who else may have theorized about the relationship between two of the characters. And, welp, Google’s Gemini cut me right off. “No,” it said. End of story.

It’s inexplicable why Google has decided to insert Gemini in this way, but that’s a post for another day. The bigger issue is how many might end their journey of discovery when an AI system offers such an abrupt response.

(P.S. Hate AI intrusion into your Google search experience? When you type a phrase or question in the search bar, add “-AI” and you won’t see an AI-generated answer.)

Who Are The “Outliers”?

In my book I talk about the fact that boring, safe, and homogenized AI responses can (if we let them) start to shape the boundaries of what we think is possible—especially for kids.

When an AI chatbot declares with such authority that something is impossible or has never been done, or simply provides a “yes” or “no” answer, it can be a serious deterrent to imagination, critical thinking, and any understanding of nuance.

Or, even worse, the answers can be inaccurate and limiting (especially as these responses are simply based on what has been written about previously).

And this is where “outliers” come into the picture. If humans and our digital data underpin these “definitive” AI responses, then it is far tidier algorithmically to just remove any errant data points from the mix (i.e., that “one person” who might have credibly argued the same theory I had about The Lazarus Project).

If there are no “examples” or content related to questions, ideas, or queries, what then? Imagine how many people have done extraordinary things that defy convention or belief but then “disappear” because the story never made it into the Internet content that drives these AI responses. That should worry us more than the “machines will destroy us” narrative we hear most frequently right now. We are at a far greater risk of handing our humanity over to technology and becoming a self-fulfilling prophecy as a result.

How Stories of “Extraordinary” People Begin to Disappear

If our news is AI generated, and our education materials are led by AI, how do we resist the urge to think “within the box” rather than outside of it? Do we wake up someday and realize we are all boring, prone to groupthink, and risk averse because “AI taught us” to be this way? Do we think the world can simply be organized into “yes” and “no,” “true” or “false”?

As readers you know I think that AI innovation can help us achieve great things. But if we don’t understand what is currently underpinning the technology we will fall prey to its darker implications.

That said, it’s not the technology that is to blame but the humans involved in its development and the “ingredients” that underpin AI’s output. For example:

Local news is disappearing. According to my graduate school alma mater, over 40% of local news outlets have dissolved in the past 10 years. When you consider that AI responses come from Internet content and that local news is where we tend to find the most “human” stories, the implications are significant. All of those “local heroes” and individual human-interest stories that aren’t feasible to cover in the same way nationally start to disappear.

We participate in fewer community events. According to the 2024 American Social Capital Survey, just 44 percent of Americans report attending a social event in their community even just a few times a year. It’s those interactions that expose us to a wider variety of people doing unique, interesting, and even great things. It’s the very basis of our understanding of other people and what’s possible.

Social media algorithms favor commercial (and other) interests. On the flip side, we spend so much time online that we experience the world according to algorithmic equations that prioritize commercial interests over truth. We are already prone to say “everyone thinks” these days without recognizing that “everyone” might not actually be…everyone.

Introducing “The Outliers” Special Section

And here is how we find ourselves at my new AI for Families section. As a trained journalist, I always return to the mandate of “showing,” not “telling,” readers. And I can think of no better way to show readers what’s at risk these days than by profiling some very extraordinary human beings.

So to supplement the ideas, research, tips, and overall thinking about AI that I cover on this website, I am now going to start profiling the types of people who defy expectation, as a celebration of what we can achieve and a warning as to what we may lose if we don’t pay attention.

Know of someone who deserves a shoutout? Let me know!