We Can't Debate AI in Schools if We Aren't Clear About the WHAT, WHY, and HOW

Without a definition of the type of AI we are talking about in edtech and school discussions, or a well-defined articulation of family concerns, it's hard to have a meaningful debate...

Most people associate “AI” with recent generative tools like ChatGPT. But in reality, AI is part of just about every platform and tool we use today. In fact, machine learning, automation, recommendation systems, and predictive analytics have all been quietly powering the majority of consumer technology platforms for more than a decade—including in schools.

If we want to ensure that teachers have the best tools available and students are protected by existing laws and regulations, the focus should be on ensuring that all edtech systems, including those employing AI, operate with strict data minimization, regulatory compliance, and full transparency around processes.

Today’s debate often treats AI as a single, novel, high-risk category, when in reality schools already rely on a wide range of data-driven systems that use similar underlying technologies. Conflating these tools under one label obscures the meaningful differences that exist in purpose, risk, data sensitivity, and educational value, making it much harder to apply the targeted safeguards that student privacy laws were designed to support.

What’s needed is strong vetting, shared accountability for data privacy, and enforcement of the regulations that already exist. Beyond that, teachers need the training and support necessary to evaluate these tools effectively.

But all of these discussions become much more challenging when we aren’t working with a clear definition of what technology is being used and how AI may be part of a given tool or platform…

The WHAT: Defining and Categorizing AI

A common misconception is that AI only refers to the most advanced generative systems students encounter today. In reality, machine learning, rule-based automation, recommendation engines, predictive analytics, and adaptive learning algorithms have been used in classrooms for over a decade.

These technologies power student information and learning management systems, testing platforms, reading and math apps, behavior-tracking tools, and attendance monitors. Many “non-AI” apps also collect detailed interaction data that is later used for internal analytics, product design decisions, or future algorithm development—all still governed by education data privacy laws.

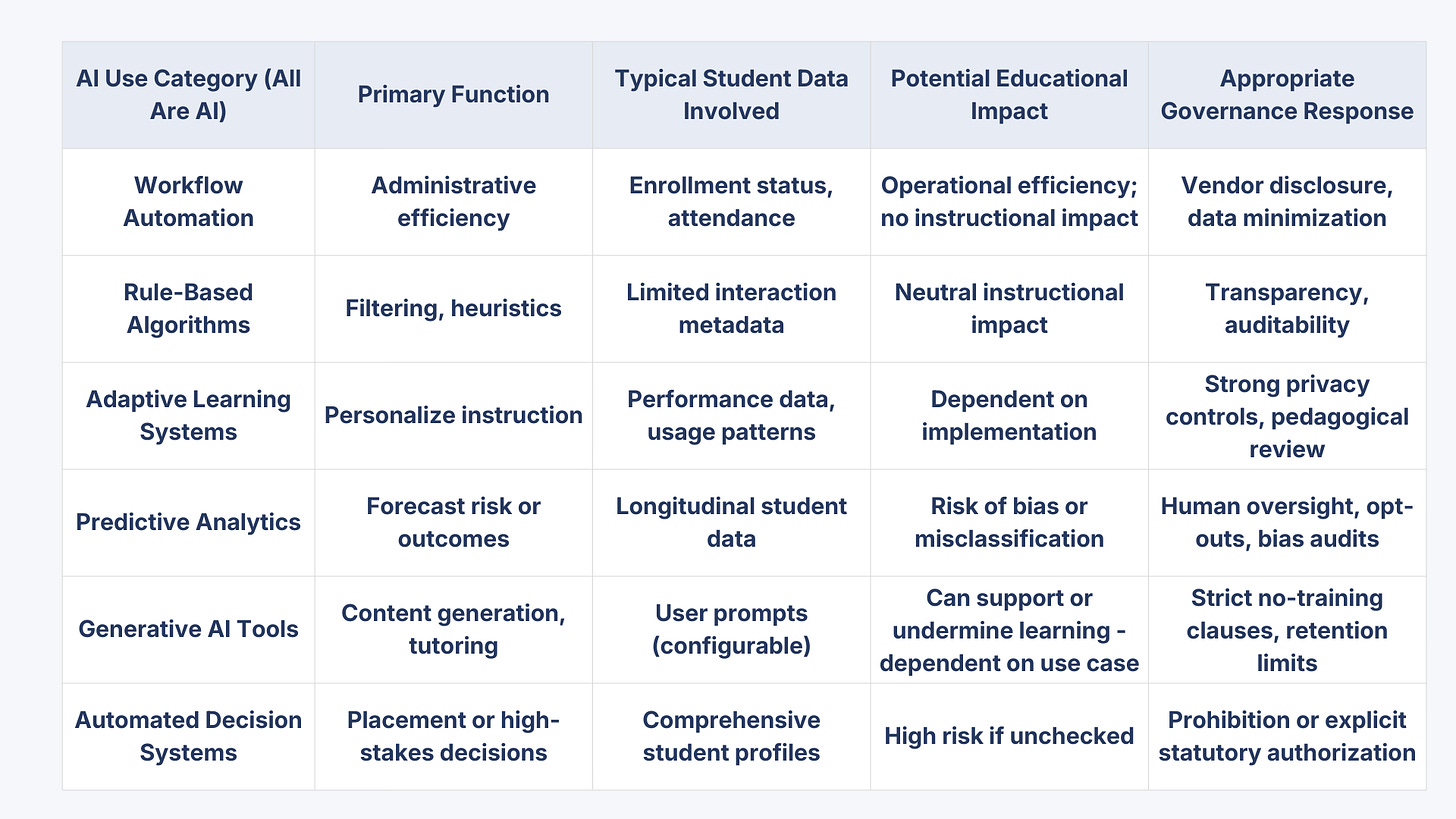

We’d be much better off categorizing AI in schools based on risk, pedagogical value, and other criteria focused on educational need rather than the underlying technology alone. A practical framework might look like this:

The WHY: Context Matters

Technology is only as good as its application and the context of its use. This is why any technology in the classroom should be judged on what it does for students and teachers, not simply on whether it employs a particular system.

For some students, AI provides the opportunity to learn alongside their peers through accessibility features: text-to-speech that helps dyslexic students access grade-level content, real-time captioning for deaf and hard-of-hearing students, or predictive text that supports students with motor difficulties in expressing complex ideas.

For others, AI-driven technology helps with language learning through adaptive pronunciation feedback or reading support through personalized phonics instruction that adjusts to individual pacing.

In a math classroom, an AI tutoring system might provide just-in-time hints that help a struggling student work through problems independently, while an adaptive assessment platform may identify specific concept gaps without requiring additional testing time.

In all cases, educators should be making informed decisions about these tools and maintaining clear communication with students about how and why they’re being used. The privacy and safety of these tools shouldn’t be an afterthought—they should be foundational requirements.

The HOW: Laws are Technology-Agnostic

As data underpins all AI innovation in schools, vendors should be scrutinized for their data protection standards, and all regulations need to be enforced. But, again, that should be true of any technology.

Data privacy in schools is both critical and severely under-prioritized. The conversation should not be limited to “AI” as a monolithic threat. The reality is that schools already rely on an enormous ecosystem of digital tools, many of which quietly collect, process, or share student information every day.

Generative AI is simply the most visible new layer on an already complex privacy landscape.

Schools already have many processes in place around procurement, data privacy, parental consent, curriculum review, and teacher oversight. What we need is to ensure those existing processes are updated to address new concerns raised by AI, like data use, transparency, and human oversight. That requires isolating specific risks and addressing them directly.

The question is not whether a tool uses AI somewhere in the background. The real issue is how much student data a system collects, what inferences it makes, and whether it affects student opportunities. Data risk increases as tools move from automation to prediction to decision-making—and oversight should scale accordingly.
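For readers who think in code, here is one way the idea of oversight scaling with risk could be written down. It is a minimal sketch, not an established framework: the `EdtechTool` record, the `collects_sensitive_data` flag, the three-level scale, and the oversight labels are all hypothetical choices made for illustration. Only the ordering from automation to prediction to decision-making comes from the paragraph above.

```python
from dataclasses import dataclass
from enum import IntEnum


class ToolFunction(IntEnum):
    """What a tool does with student data, ordered by rising data risk."""
    AUTOMATION = 1       # e.g. rule-based automation
    PREDICTION = 2       # e.g. making inferences about a student
    DECISION_MAKING = 3  # e.g. outputs that affect student opportunities


@dataclass(frozen=True)
class EdtechTool:
    name: str
    function: ToolFunction
    collects_sensitive_data: bool  # hypothetical flag set by the reviewer


# Hypothetical oversight labels; a district would substitute its own.
OVERSIGHT = {
    1: "standard procurement and privacy review",
    2: "standard review plus documented data-use terms",
    3: "enhanced review with required human oversight",
}


def oversight_level(tool: EdtechTool) -> int:
    """Return a level from 1 to 3 that never drops as risk rises."""
    level = int(tool.function)
    # Assumption: sensitive data bumps the level by one, capped at 3.
    if tool.collects_sensitive_data:
        level = min(level + 1, 3)
    return level
```

A reviewer could then call `OVERSIGHT[oversight_level(tool)]` for each tool in an inventory. The design choice worth noticing is that the function never asks whether a tool "uses AI"; it looks only at what the tool does and what data it touches.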

AI is Inevitable, Confusion is Optional

Once we get clear about what we are actually talking about when we say “AI,” families, educators, and policymakers can exert real influence over how these tools are governed and used in schools. That influence depends on precision, not fear or blanket opposition.

Concerns about student data misuse, legal compliance, instructional quality, and unintended consequences are valid and deserve serious attention. But data practices are a matter of policy and contract, not technical destiny.

The question facing schools is not whether AI will be present; it already is. The real question is whether communities will engage thoughtfully enough to shape outcomes that reflect their values. This requires shared definitions, transparency about how tools are used, and good governance frameworks.

The goal is not consensus for its own sake, but a common baseline for discussion, one grounded in clarity about the WHAT, the WHY, and the HOW. From there, we absolutely should engage in robust debate.

There are no clear or easy answers when it comes to technology innovation, but the only way we get to meaningful solutions and consensus is through debate in good faith and on equal footing.
